Good. In part 1 we saw a few examples of what is possible with the API. In this part, I will try to explain why I think the hype around the API is more justified than the hype around AI in general.

Once again, I will make my case with examples from my experiments. I tried to explore what knowledge the API already had. The following examples are not examples of learning; they show what the API was already capable of.

Experiment 4:

Title: Location Information

Result:

Analysis:

Surprise: it gave the right answer for most queries. So it has apparently already scraped and stored location information for a lot of places. I didn't stress-test it, but I am fairly sure it has information on most places.

Experiment 4.1:

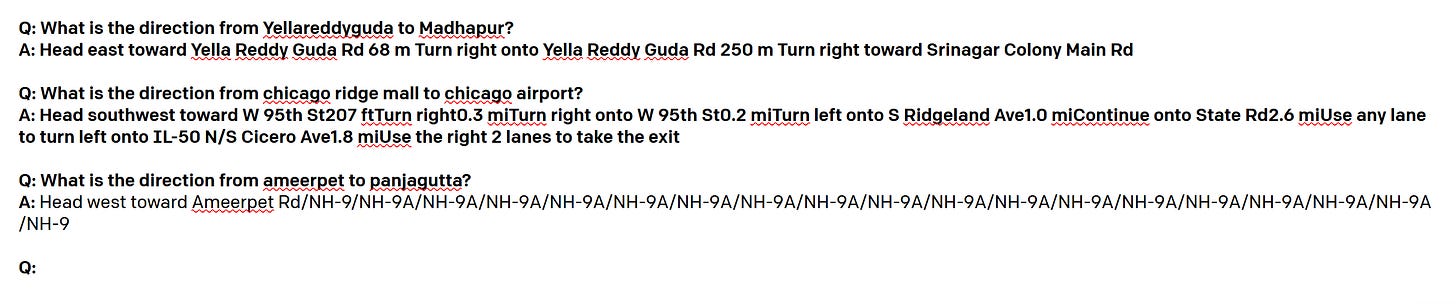

Title: Directions

Result:

Analysis:

Damn. It does not have this information. Maybe I was expecting too much :)

Experiment 4.2:

Title: Contact Details

Result:

Analysis:

Pretty good results. It got the details right for most well-known US companies. It did not do as well with Indian companies, but it's still pretty cool. So now I have an API to get the contact details of companies. It did not work for emails, though :)

Experiment 4.3:

Title: Movie Details

Result:

Analysis:

Mind-blowingly good results. This was somewhat expected, as I am sure IMDb and other sources were parsed. Still, it got the relationships, actor names, etc. right. This is going to be my interface to movie trivia going forward. I mean, Netflix and others should just use this on the backend to power natural-language search queries.

That’s it for part 2. In the next part I will cover the experiments where GPT-3 really shined.
