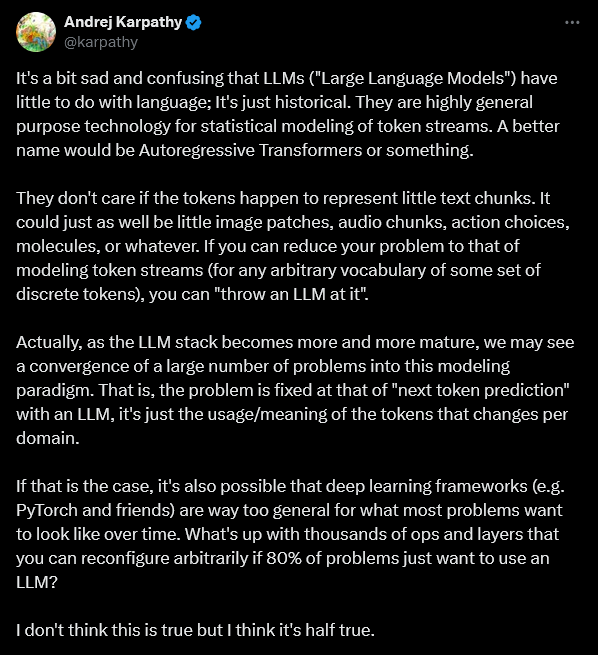

Karpathy wrote a tweet arguing that "large language models" is the wrong name (or at least half wrong) for the new AI models.

I disagree.

What has actually happened is that these LLMs have shown that almost everything is a “language”.

The “language” in the name works out perfectly. It is just that instead of English or some other natural language, we have the language of math, the language of music, or the language of some other domain.

So a large “language” model can learn any “language”. The same architecture works. Only the domain varies.
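To make the "same architecture, different domain" point concrete, here is a toy sketch. It is a simple bigram counter, nowhere near a real LLM, and the function names (`train_bigram`, `predict_next`) and the tiny datasets are made up for illustration. The only point is that the exact same code learns to predict the next token whether the tokens are English words or musical notes.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def predict_next(counts, token):
    """Return the most frequent follower of `token`, or None if unseen."""
    followers = counts.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Two different "languages", both just sequences of tokens (illustrative data).
english = "the cat sat on the mat and the cat slept".split()
melody = "C E G C E G C E A".split()

# The same training function is used for both domains.
english_model = train_bigram(english)
music_model = train_bigram(melody)

print(predict_next(english_model, "the"))  # cat
print(predict_next(music_model, "E"))      # G
```

Nothing in `train_bigram` knows whether it is looking at words or notes. Only the vocabulary changes.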

To extend this, consider what we mean by an expert. An expert in finance is a person who knows the vocabulary of finance: someone who knows the language of finance and can think in it. That means we can train an LLM in the language of finance.

Karpathy actually got the gist right, which is that everything can be modeled by an LLM. But he says language is not the right word, and I am saying that language is the perfect word. It’s the reverse: in the real world, we have to think of everything in terms of language.

Want to become an expert at math? Learn the language of math. Want to become an expert at calculus? Learn the language of calculus. That just means learning and understanding the new vocabulary.

I have taken this approach and created a small course for calculus. Try it out and see how it works.