The use cases of large language models (LLMs)

It's not AGI

Ever since large language models made their presence felt, there has been a lot of discussion about whether we are on the path to AGI or on an off-ramp away from it.

Though this is a good philosophical discussion to have, it is not going to be settled soon. So it’s better to concentrate on the real-world solutions that LLMs offer right now. The biggest advance LLMs have achieved is solving almost all NLP problems. It’s really surprising that something as simple as next-word prediction can solve so many of them. In fact, LLMs are so good at NLP problems that NLP engineers and NLP jobs may very soon become extinct.

Let's break down the different NLP problems and see which of them LLMs handle best.

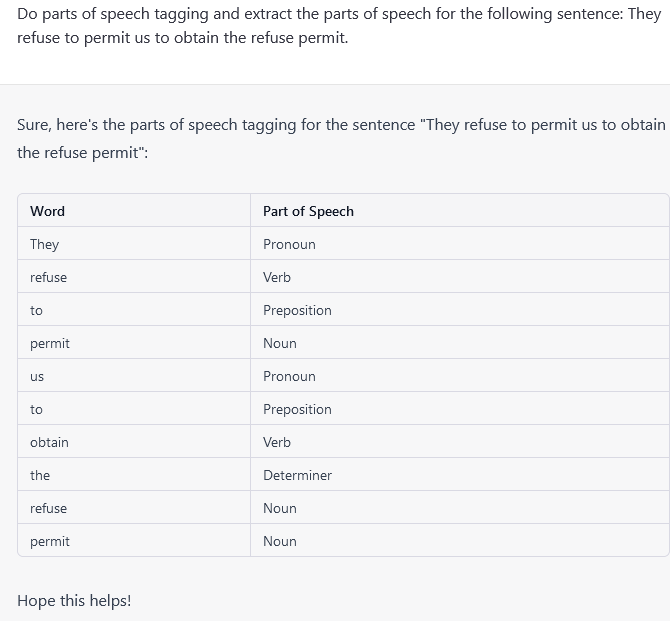

Part-of-speech tagging: LLMs are the best POS taggers I have found. There is no need to install large Python packages and fiddle with settings and rules. Just ask the LLM to identify the parts of speech and it spits out a nicely formatted answer.

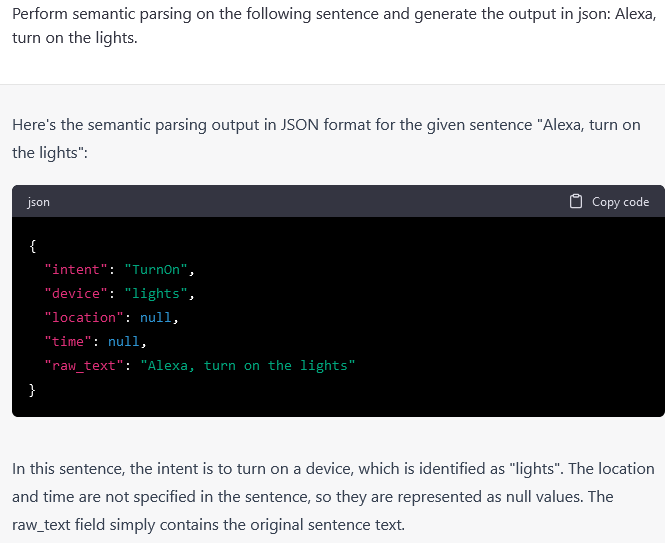

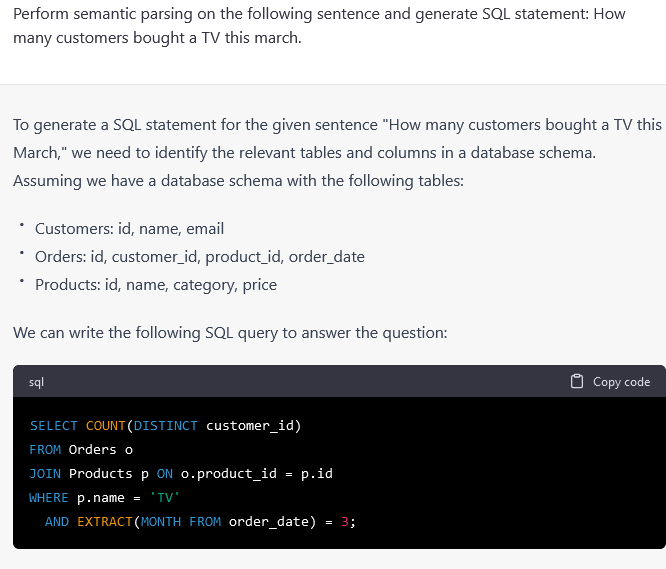
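
If the tags need to feed into a program rather than a human, it helps to pin the model to a fixed tag set and a machine-readable reply. The Python sketch below assumes the Universal Dependencies tag set and a JSON reply; the prompt wording and the function names are illustrative, and actually sending the prompt to a model is left to whatever LLM client you use.

```python
import json

# Universal Dependencies part-of-speech tags (17 labels). Asking for a fixed
# tag set makes the model's answer easy to validate.
UPOS_TAGS = {
    "ADJ", "ADP", "ADV", "AUX", "CCONJ", "DET", "INTJ", "NOUN", "NUM",
    "PART", "PRON", "PROPN", "PUNCT", "SCONJ", "SYM", "VERB", "X",
}


def build_pos_prompt(sentence: str) -> str:
    """Build a prompt asking for [token, tag] pairs as JSON (wording is an assumption)."""
    return (
        "Tag every token in the sentence below with its Universal Dependencies "
        "part-of-speech tag. Reply with only a JSON array of [token, tag] pairs.\n\n"
        f"Sentence: {sentence}"
    )


def parse_pos_response(raw: str) -> list[tuple[str, str]]:
    """Parse the model's JSON reply, rejecting any tag outside the UPOS set."""
    tagged = []
    for token, tag in json.loads(raw):
        if tag not in UPOS_TAGS:
            raise ValueError(f"unexpected tag {tag!r} for token {token!r}")
        tagged.append((token, tag))
    return tagged


if __name__ == "__main__":
    print(build_pos_prompt("The cat sat on the mat."))
    # Hand-written example reply, not real model output.
    reply = ('[["The", "DET"], ["cat", "NOUN"], ["sat", "VERB"], ["on", "ADP"], '
             '["the", "DET"], ["mat", "NOUN"], [".", "PUNCT"]]')
    print(parse_pos_response(reply))
```

Validating against a closed tag set is a cheap way to catch the occasional reply that drifts into a different tag scheme or free text.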

Semantic parsing: “Semantic parsing is the task of converting a natural language utterance to a logical form: a machine-understandable representation of its meaning.[1] Semantic parsing can thus be understood as extracting the precise meaning of an utterance.” LLMs have solved this problem too. With the right prompt, you can ask an LLM to parse any statement and output the result in whatever format you need.

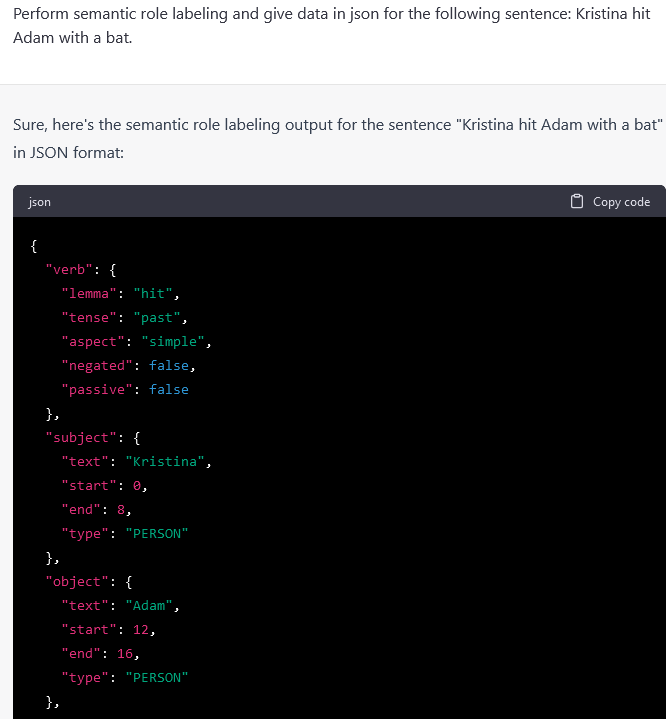
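
One way to make "any format" concrete is to give the model a target schema and check its reply against it. This is a minimal sketch assuming a hypothetical meeting-scheduling schema (`SCHEMA`) and JSON output; neither comes from any particular library or standard.

```python
import json

# Hypothetical target logical form for a meeting-scheduling request.
# The keys and types are an illustrative assumption, not a standard.
SCHEMA = {"intent": str, "attendees": list, "date": str, "time": str}


def build_parse_prompt(utterance: str) -> str:
    """Ask the model to map an utterance onto SCHEMA, as JSON only."""
    keys = ", ".join(SCHEMA)
    return (
        f"Convert the request below into a JSON object with exactly these keys: {keys}. "
        "Write time as 24-hour HH:MM. Reply with JSON only.\n\n"
        f"Request: {utterance}"
    )


def validate_logical_form(raw: str) -> dict:
    """Parse the reply and check it has exactly the schema's keys and types."""
    form = json.loads(raw)
    if not isinstance(form, dict):
        raise ValueError("expected a JSON object")
    if set(form) != set(SCHEMA):
        raise ValueError(f"keys {sorted(form)} do not match {sorted(SCHEMA)}")
    for key, expected_type in SCHEMA.items():
        if not isinstance(form[key], expected_type):
            raise TypeError(f"{key!r} should be {expected_type.__name__}")
    return form


if __name__ == "__main__":
    print(build_parse_prompt("Set up a meeting with Alice tomorrow at 3pm"))
    # Hand-written example reply, not real model output.
    reply = ('{"intent": "schedule_meeting", "attendees": ["Alice"], '
             '"date": "tomorrow", "time": "15:00"}')
    print(validate_logical_form(reply))
```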

Semantic role labeling: LLMs also handle this closely related task pretty well.

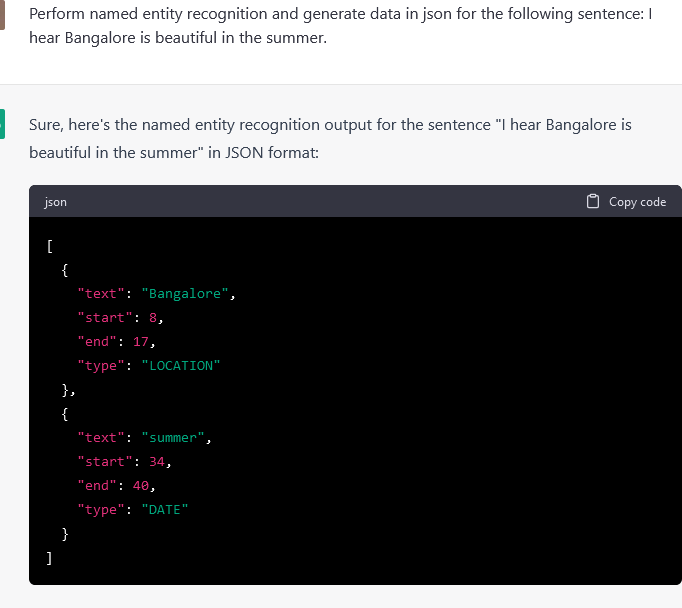
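
Because an LLM can invent argument spans that are not in the sentence, a simple grounding check is worth adding when the roles feed other code. The sketch assumes PropBank-style labels and a JSON reply shape chosen for illustration; the substring test is a rough heuristic, not a full alignment.

```python
import json


def build_srl_prompt(sentence: str) -> str:
    """Ask for PropBank-style roles per predicate (prompt wording is an assumption)."""
    return (
        "For each verb in the sentence below, list its semantic roles using PropBank "
        "labels such as ARG0, ARG1, ARG2, ARGM-TMP and ARGM-LOC. Reply with only a JSON "
        'array of objects shaped like {"predicate": "...", "roles": {"ARG0": "..."}}, '
        "copying every span verbatim from the sentence.\n\n"
        f"Sentence: {sentence}"
    )


def parse_srl_response(sentence: str, raw: str) -> list[dict]:
    """Parse the frames and reject any span that does not occur in the sentence."""
    frames = json.loads(raw)
    for frame in frames:
        for span in [frame["predicate"], *frame["roles"].values()]:
            if span not in sentence:
                raise ValueError(f"span {span!r} not found in the sentence")
    return frames


if __name__ == "__main__":
    sentence = "Mary sold the book to John yesterday."
    # Hand-written example reply, not real model output.
    reply = ('[{"predicate": "sold", "roles": {"ARG0": "Mary", "ARG1": "the book", '
             '"ARG2": "to John", "ARGM-TMP": "yesterday"}}]')
    for frame in parse_srl_response(sentence, reply):
        print(frame["predicate"], frame["roles"])
```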

Named Entity Recognition: LLMs work almost perfectly for this. I have used LLMs for NER in many language tasks and they have always worked really well. Once again, there is no need to learn any Python libraries or write code. Just ask and it delivers.
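
When NER output does need to feed other software, downstream tools often expect character offsets rather than bare strings. This sketch, assuming a four-type label set and a reply that lists entities in order of appearance, locates each returned entity in the source text; the label set, prompt wording and function names are illustrative assumptions.

```python
import json

ENTITY_TYPES = {"PERSON", "ORG", "LOCATION", "DATE"}  # assumed label set


def build_ner_prompt(text: str) -> str:
    """Ask for entities in order of appearance, copied exactly (wording is an assumption)."""
    labels = ", ".join(sorted(ENTITY_TYPES))
    return (
        f"Extract all named entities of types {labels} from the text below, in the order "
        'they appear. Reply with only a JSON array of {"text": ..., "type": ...} objects, '
        "copying each entity exactly as written.\n\n"
        f"Text: {text}"
    )


def attach_offsets(text: str, raw: str) -> list[dict]:
    """Find each returned entity in the text, in order, and record its character span."""
    entities = []
    cursor = 0
    for ent in json.loads(raw):
        if ent["type"] not in ENTITY_TYPES:
            raise ValueError(f"unknown entity type {ent['type']!r}")
        start = text.find(ent["text"], cursor)
        if start == -1:
            raise ValueError(f"entity {ent['text']!r} not found after offset {cursor}")
        end = start + len(ent["text"])
        entities.append({**ent, "start": start, "end": end})
        cursor = end  # search for the next entity after this one
    return entities


if __name__ == "__main__":
    text = "Tim Cook visited Berlin in May 2023."
    print(build_ner_prompt(text))
    # Hand-written example reply, not real model output.
    reply = ('[{"text": "Tim Cook", "type": "PERSON"}, {"text": "Berlin", "type": "LOCATION"}, '
             '{"text": "May 2023", "type": "DATE"}]')
    for ent in attach_offsets(text, reply):
        print(ent)
```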

Question Answering: This is the most exciting advancement. LLMs like ChatGPT have been a godsend for question answering, and this is where I see the biggest business impact. We have already deployed website question answering solutions in production, and they work really well. Combining semantic search with generative AI has given us a great solution for document question answering.

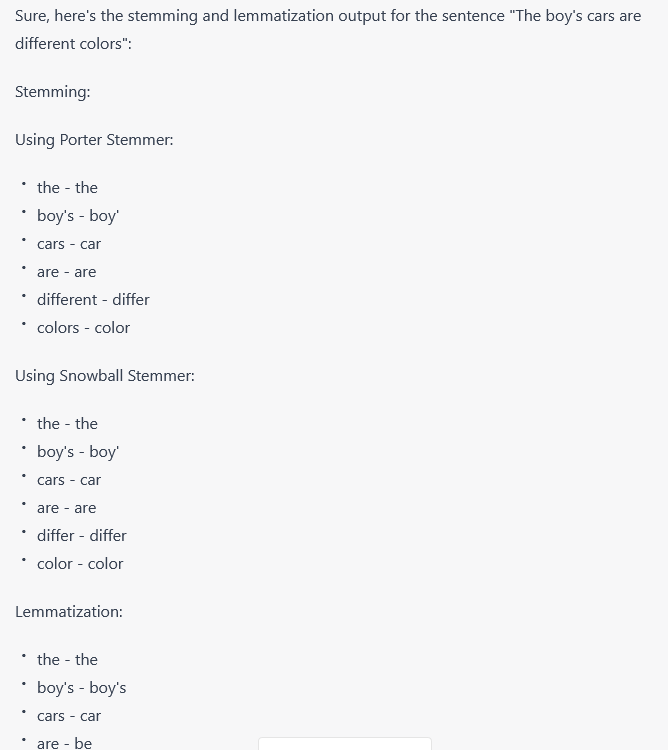
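
The semantic search plus generative AI pattern can be sketched as retrieve, then prompt. A real deployment would use an embedding model and a vector store; here a bag-of-words `Counter` stands in for the embedding so the example runs with the standard library only. The function names, the `k` parameter and the sample passages are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Stand-in for a real embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, passages: list[str], k: int = 2) -> list[str]:
    """Return the k passages most similar to the question."""
    q = embed(question)
    return sorted(passages, key=lambda p: cosine(q, embed(p)), reverse=True)[:k]


def build_qa_prompt(question: str, passages: list[str], k: int = 2) -> str:
    """Ground the generation step in the retrieved passages only."""
    context = "\n\n".join(
        f"[{i}] {p}" for i, p in enumerate(retrieve(question, passages, k), start=1)
    )
    return (
        "Answer the question using only the numbered passages below. If they do not "
        "contain the answer, say you don't know.\n\n"
        f"{context}\n\nQuestion: {question}"
    )


if __name__ == "__main__":
    # Hypothetical website text used only to exercise the sketch.
    docs = [
        "Our support desk is open Monday to Friday, 9am to 5pm.",
        "Refunds are issued within 14 days of a return.",
        "The office is located in Austin, Texas.",
    ]
    print(build_qa_prompt("How long do refunds take?", docs, k=1))
```

Swapping `embed` for a real embedding model changes the retrieval quality but not the shape of the pipeline: the generation step only ever sees the top `k` passages.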

Stemming and Lemmatization: These are generally the first steps in an NLP pipeline, done to help downstream tasks. Now that the downstream tasks themselves are solved using LLMs, there is very little use for these low-level steps. But if you still need stemming or lemmatization, you can use an LLM for that too.

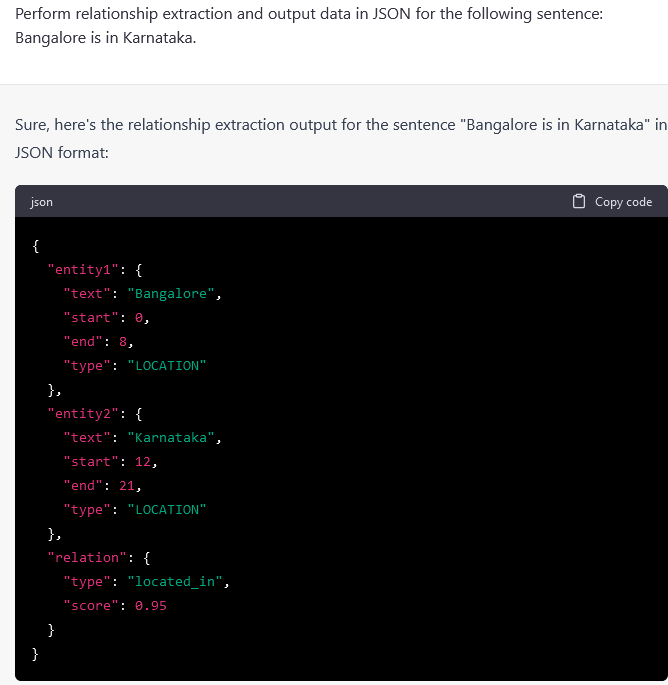
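
To get lemmas you can line up with your own tokens, it helps to tokenize locally and ask for exactly one lemma per token. A minimal sketch, assuming a regex tokenizer and a JSON-array reply; the function names are illustrative.

```python
import json
import re


def tokenize(text: str) -> list[str]:
    """Split into word and punctuation tokens so lemmas can be aligned one-to-one."""
    return re.findall(r"\w+|[^\w\s]", text)


def build_lemma_prompt(tokens: list[str]) -> str:
    """Ask for one lemma per token, in order (prompt wording is an assumption)."""
    return (
        "Give the dictionary lemma of each token below, in the same order. Reply with "
        "only a JSON array containing one string per token; leave punctuation unchanged.\n\n"
        f"Tokens: {json.dumps(tokens)}"
    )


def align_lemmas(tokens: list[str], raw: str) -> list[tuple[str, str]]:
    """Pair each token with its lemma, failing loudly if the counts differ."""
    lemmas = json.loads(raw)
    if len(lemmas) != len(tokens):
        raise ValueError(f"expected {len(tokens)} lemmas, got {len(lemmas)}")
    return list(zip(tokens, lemmas))


if __name__ == "__main__":
    tokens = tokenize("The mice were running.")
    print(build_lemma_prompt(tokens))
    # Hand-written example reply, not real model output.
    print(align_lemmas(tokens, '["the", "mouse", "be", "run", "."]'))
```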

Relationship extraction: LLMs are really good at extracting relationships from a given text. The results can then be used for further analysis or for building knowledge graphs. BTW, LLMs are pretty good at building knowledge graphs too.
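
Extracted relationships are commonly represented as subject-relation-object triples, which map directly onto graph edges. The sketch below assumes the model replies with a JSON array of triples and builds a plain adjacency list; the prompt wording and function names are illustrative, and a dedicated graph library or database would replace the `dict` in practice.

```python
import json
from collections import defaultdict


def build_relation_prompt(text: str) -> str:
    """Ask for subject-relation-object triples as JSON (wording is an assumption)."""
    return (
        "Extract the factual relationships in the text below as subject-relation-object "
        'triples. Reply with only a JSON array of ["subject", "relation", "object"] arrays.\n\n'
        f"Text: {text}"
    )


def build_graph(raw: str) -> dict[str, list[tuple[str, str]]]:
    """Turn triples into an adjacency list: subject -> [(relation, object), ...]."""
    graph = defaultdict(list)
    for subject, relation, obj in json.loads(raw):
        edge = (relation.strip().lower(), obj.strip())
        if edge not in graph[subject.strip()]:  # drop duplicate triples
            graph[subject.strip()].append(edge)
    return dict(graph)


if __name__ == "__main__":
    # Hand-written example reply, not real model output.
    reply = ('[["Marie Curie", "born in", "Warsaw"], '
             '["Marie Curie", "won", "Nobel Prize in Physics"], '
             '["Marie Curie", "Won", "Nobel Prize in Physics"]]')
    for subject, edges in build_graph(reply).items():
        for relation, obj in edges:
            print(f"{subject} --{relation}--> {obj}")
```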

Automatic summarization: Summarization is a solved problem, thanks to LLMs. Feed in a bunch of text, ask an LLM to summarize it, and you get summaries of almost human-level quality.
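
Very long inputs may not fit in a model's context window, and a common workaround is map-reduce summarization: summarize chunks, then summarize the summaries. In this sketch `summarize` is a hypothetical callable wrapping your LLM, word counts stand in for token counts, and `max_words=500` is an arbitrary choice.

```python
from typing import Callable


def chunk_words(text: str, max_words: int = 500) -> list[str]:
    """Split text into chunks of at most max_words whitespace-separated words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def map_reduce_summary(text: str, summarize: Callable[[str], str], max_words: int = 500) -> str:
    """Summarize each chunk, then summarize the combined summaries until one chunk remains."""
    chunks = chunk_words(text, max_words)
    if not chunks:
        return ""
    if len(chunks) == 1:
        return summarize(chunks[0])
    combined = "\n".join(summarize(chunk) for chunk in chunks)
    # Stop if the summarizer is not shrinking the text; otherwise we would recurse forever.
    if len(combined.split()) >= len(text.split()):
        raise ValueError("chunk summaries are not shorter than the input")
    return map_reduce_summary(combined, summarize, max_words)


if __name__ == "__main__":
    # Toy stand-in for an LLM call: keep the first five words of each chunk.
    def first_five(chunk: str) -> str:
        return " ".join(chunk.split()[:5])

    text = " ".join(f"w{i}" for i in range(2000))
    print(map_reduce_summary(text, first_five))
```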

Keyword extraction: LLMs can act as a passable keyword extraction system. We can use them to find keywords and analyze which words are used to describe products across large datasets.
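
For the product-description use case, per-review keywords only become useful once they are aggregated across the dataset. This sketch assumes a hypothetical `ask` callable that sends a prompt to your LLM and returns its text reply; the prompt wording and the canned demo replies are illustrative, not real model output.

```python
import json
from collections import Counter
from typing import Callable


def build_keyword_prompt(review: str, max_keywords: int = 5) -> str:
    """Ask for a short JSON list of descriptive keywords (wording is an assumption)."""
    return (
        f"List up to {max_keywords} keywords or short phrases that describe the product "
        "in this review. Reply with only a JSON array of strings.\n\n"
        f"Review: {review}"
    )


def keyword_frequencies(reviews: list[str], ask: Callable[[str], str]) -> Counter:
    """Ask the model for keywords per review and count them across the dataset."""
    counts = Counter()
    for review in reviews:
        keywords = json.loads(ask(build_keyword_prompt(review)))
        # Normalise case/whitespace and count each keyword at most once per review.
        counts.update({kw.strip().lower() for kw in keywords})
    return counts


if __name__ == "__main__":
    # Canned replies standing in for a real model (hand-written, not real output).
    canned = {
        "Very light, and the battery lasts all day.": '["lightweight", "battery life"]',
        "Battery life is great and it feels sturdy.": '["Battery life", "sturdy"]',
    }

    def fake_ask(prompt: str) -> str:
        return canned[prompt.split("Review: ", 1)[1]]

    print(keyword_frequencies(list(canned), fake_ask).most_common())
```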

Text Generation: Of course, LLMs are the best at text generation. Enough has been said about that, so I will not dwell on it here.

As the examples above show, LLMs can directly replace traditional tools for most NLP tasks. If an LLM does not work as expected for a particular use case, use it for an upstream task, get the result in the format you want, and then feed that result into the downstream task.
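
The upstream/downstream split can be sketched as follows: the LLM produces structured JSON, and ordinary code takes it from there. The `ask` callable, the retry count and the function names are assumptions for illustration; re-prompting with the parse error is one simple way to handle replies that are not valid JSON.

```python
import json
from typing import Any, Callable


def ask_json(prompt: str, ask: Callable[[str], str], retries: int = 2) -> Any:
    """Call the model and parse its reply as JSON, re-prompting on parse errors."""
    error = None
    for _ in range(retries + 1):
        if error is None:
            message = prompt
        else:
            message = (f"{prompt}\n\nYour previous reply was not valid JSON ({error}). "
                       "Reply with JSON only.")
        try:
            return json.loads(ask(message))
        except json.JSONDecodeError as exc:
            error = exc.msg
    raise ValueError(f"no valid JSON after {retries + 1} attempts: {error}")


def entities_by_type(text: str, ask: Callable[[str], str]) -> dict[str, list[str]]:
    # Upstream step: the LLM turns free text into structured entities.
    entities = ask_json(
        "List the named entities in the text below as a JSON array of "
        '{"text": ..., "type": ...} objects.\n\n'
        f"Text: {text}",
        ask,
    )
    # Downstream step: ordinary code groups the entity strings by type.
    grouped: dict[str, list[str]] = {}
    for ent in entities:
        grouped.setdefault(ent["type"], []).append(ent["text"])
    return grouped


if __name__ == "__main__":
    # Canned replies standing in for a model: first a chatty non-JSON reply, then JSON.
    replies = iter([
        "Sure! Here are the entities:",
        '[{"text": "Acme", "type": "ORG"}, {"text": "Paris", "type": "LOCATION"}]',
    ])
    print(entities_by_type("Acme opened an office in Paris.", lambda prompt: next(replies)))
```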

I expect to see a lot of back-office work get automated, thanks to LLMs. And now that Meta's LLaMA models can run on consumer-grade hardware, we can deploy these solutions on-premises without depending on any particular cloud provider.